Scaling up Graph Neural Networks

Decoupled GNN architecture

Qualifying Examinations report.

Recent years have witnessed the burgeoning of services based on data represented by graphs, which leads to rapid increase in the amount and complexity of such graph data. Graph Neural Networks (GNNs) are specialized neural models designed to represent and process graph data, and is becoming increasingly popular thanks to their impressive performance on a wide range of graph learning tasks. Most of these models, however, are known to be difficult to scale up. Our examination suggests that the GNN scalability bottleneck is usually the iterative message-passing propagation procedure, which is tightly coupled with the graph structure. Hence, improving the performance of the expensive graph propagation by graph related techniques becomes the key in scaling up GNNs.

In this report, we explore the scalability issue on different graph variants and GNN designs, and propose our approaches to scale up GNN to million- or even billion-scale graphs by simplifying the graph propagation operation.

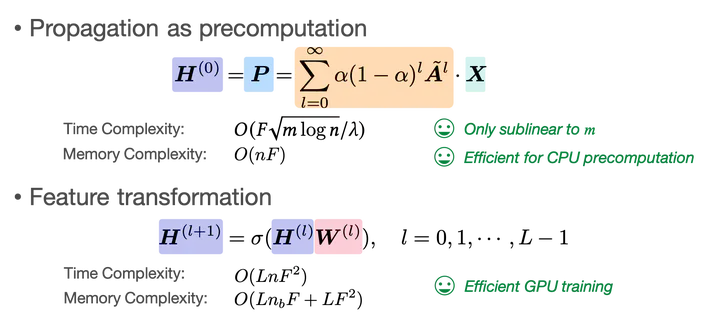

We first propose SCARA, a scalable GNN with feature-oriented optimization for graph computation, to address the propagation bottleneck by decoupling it as precomputation. SCARA efficiently computes graph embedding from the dimension of node features, and further selects and reuses feature computation results to reduce overhead. Theoretical analysis indicates that our model achieves sub-linear time complexity with a guaranteed precision in propagation process as well as GNN training and inference. We conduct extensive experiments on various datasets to evaluate the efficacy and efficiency of our model. Performance comparison with baselines shows that SCARA can reach up to 800× graph propagation acceleration than current state-of-the-art methods with fast convergence and comparable accuracy.

Next, we specifically study the scalability issue of heterophilous GNN, a family of GNNs that specializes in learning graphs where connected nodes tend to have different labels. We propose a scalable model, LD2, which simplifies the learning procedure by decoupling graph propagation and generating expressive embeddings prior to training. We perform theoretical analysis to demonstrate that LD2 realizes optimal time complexity in training, as well as a memory footprint that remains independent of the graph scale. The capability of our model is evaluated by extensive experiments. Being lightweight in minibatch training on large-scale heterophilous graphs, it achieves up to 15× speed improvement and efficient memory utilization, while maintaining comparable or better performance than the baselines.